In this article, we will discuss the locking mechanism in C#. We will explain what locking is and why we need it, and then demonstrate the different ways we can use it.

Let’s start.

What Is the Locking Mechanism in C#?

To make applications more performant, we often make them multithreaded. This design keeps computing resources from sitting idle while the application waits for an operation to finish or for results from an external resource.

During execution, different threads may need to access shared resources simultaneously. Examples of shared resources are in-memory variables and external objects, such as files. If multiple threads change a shared resource simultaneously, the application could end up in an inconsistent state, producing errors or even crashing.

Let’s demonstrate that:

```csharp
using System.Threading;

public static class MultithreadWithoutLocking
{
    private static int _counter = 0;

    public static int Execute()
    {
        var thread1 = new Thread(IncrementCounter);
        var thread2 = new Thread(IncrementCounter);
        var thread3 = new Thread(IncrementCounter);

        thread1.Start();
        thread2.Start();
        thread3.Start();

        thread1.Join(); // main thread waiting until thread1 finishes
        thread2.Join(); // main thread waiting until thread2 finishes
        thread3.Join(); // main thread waiting until thread3 finishes

        return _counter;
    }

    private static void IncrementCounter()
    {
        for (var cnt = 0; cnt < 100000; cnt++)
        {
            _counter++; // not atomic: read, increment, write back
        }
    }
}
```

We define the MultithreadWithoutLocking class. In its Execute() method, we create and start three threads, each executing the IncrementCounter() method, and then wait for all of them to finish. The IncrementCounter() method increments the _counter variable by 1 in a for loop that runs 100 000 times.

Now, let’s call the Execute() method:

```csharp
Console.WriteLine($"No lock result: {MultithreadWithoutLocking.Execute()}"); // No lock result: varies between runs, usually smaller than 300000
```

Each time we execute the method, the result will be different and usually smaller than the expected value of 300 000. The _counter variable in this example is the shared resource that three threads modify simultaneously. The ++ operation isn’t atomic: it consists of three steps (read, modify, write). Two or more threads can read the same value of _counter, each increment it, and each write back the same result, so some of the increments are lost.
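To make the lost update concrete, here is a minimal single-threaded simulation that replays, step by step, one unlucky interleaving of two threads running the increment. The variable names are illustrative; real threads interleave unpredictably, and this is just one possible order of the read, modify, and write steps:

```csharp
using System;

var counter = 0;

// Read: both "threads" read the shared value before either writes it back.
var readByThreadA = counter;
var readByThreadB = counter;

// Modify: each thread increments its own private copy.
var newValueA = readByThreadA + 1;
var newValueB = readByThreadB + 1;

// Write: both write back, and thread B's write overwrites thread A's.
counter = newValueA;
counter = newValueB;

Console.WriteLine($"Counter after two increments: {counter}"); // Counter after two increments: 1
```

Two increments ran, but only one is visible, which is exactly how the multithreaded example loses increments.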

The Atomic Operations

We call the parts of the code that access a shared resource critical sections; in our example, that is the _counter++ statement in the IncrementCounter() method. We want to make those critical sections atomic. An atomic operation always produces the same result, no matter how many threads execute it simultaneously: it either completes entirely or fails without modifying or breaking the application state. We call that thread safety.

The most common way to ensure thread safety is the locking mechanism. With locks, we synchronize threads and control how different threads access the critical sections. Typically, while one thread executes a critical section, other threads can neither enter it nor see its intermediate results until that thread finishes.

We also use synchronization to make threads execute in a specific order. For example, if one thread needs a result from another, it can wait until the other thread signals that the result is ready, instead of proceeding with an incomplete value.

.NET has a built-in class that performs simple operations atomically: the Interlocked class. Let’s explore it in more detail.

Interlocked Class

We can use the Interlocked class for variables that are shared by multiple threads. This class can’t lock an entire block of code, but it can perform a simple operation on a single variable atomically, and it does so without blocking other threads. For more complex scenarios, for example, when we want to execute multiple lines of code as one unit, we use a more advanced locking mechanism, such as the lock statement, the Monitor class, or the Mutex class.

The Interlocked class has many methods, but they implement only simple operations; we can explore them in the Microsoft documentation. For example, to increment the _counter variable atomically, we can use the Interlocked.Increment() method:

```csharp
public static int Increment(ref int location)
```

The method takes the variable to increment by reference, increments it as a single atomic operation, and returns the new value. That makes it safe to call from our three threads simultaneously.

Let’s create an InterlockedClass class that is identical to MultithreadWithoutLocking except for the IncrementCounter() method, which now uses the Interlocked class:

```csharp
private static void IncrementCounter()
{
    for (var cnt = 0; cnt < 100000; cnt++)
    {
        Interlocked.Increment(ref _counter);
    }
}
```

Then, let’s call the Execute() method of InterlockedClass:

```csharp
Console.WriteLine($"Interlocked result: {InterlockedClass.Execute()}"); // Interlocked result: 300000
```
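The Interlocked class offers more than Increment(). The standalone sketch below shows the return values of Add(), Decrement(), Exchange(), and CompareExchange() on a single variable, and then uses CompareExchange() in a retry loop to build an atomic "keep the maximum" update, which Interlocked doesn’t provide directly. UpdateMaximum() is a hypothetical helper written only for this example:

```csharp
using System;
using System.Threading;

var number = 10;

var afterAdd = Interlocked.Add(ref number, 5);          // number is 15; returns the new value, 15
var afterDecrement = Interlocked.Decrement(ref number); // number is 14; returns the new value, 14
var previous = Interlocked.Exchange(ref number, 100);   // number is 100; returns the old value, 14

// Writes 200 only if number still equals 100; always returns the value it found.
var found = Interlocked.CompareExchange(ref number, 200, 100); // number is 200; returns 100

var maximum = 0;

// Hypothetical helper: atomically replaces maximum with candidate if candidate is larger.
void UpdateMaximum(int candidate)
{
    int current;
    do
    {
        current = Volatile.Read(ref maximum);
        if (candidate <= current)
        {
            return; // the stored maximum is already at least as large
        }
    }
    // Retry if another thread changed maximum after we read it.
    while (Interlocked.CompareExchange(ref maximum, candidate, current) != current);
}

var threads = new Thread[4];
for (var i = 0; i < threads.Length; i++)
{
    var valueForThread = (i + 1) * 10; // 10, 20, 30, 40
    threads[i] = new Thread(() => UpdateMaximum(valueForThread));
    threads[i].Start();
}

foreach (var thread in threads)
{
    thread.Join();
}

Console.WriteLine($"{afterAdd} {afterDecrement} {previous} {found} {number} {maximum}"); // 15 14 14 100 200 40
```

The retry loop is the usual way to build a new atomic operation from CompareExchange(): if another thread changes the value between our read and our write, CompareExchange() returns something other than what we read, and we try again with the fresh value.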

The Exclusive Locking Mechanism in C#

Exclusive locks prevent any other thread from obtaining a lock on the locked object. That ensures that only one thread at a time executes the critical section.

Lock Statement

The lock statement acquires an exclusive lock on an object, executes the statement block, and then releases the lock. While one thread holds the lock, any other thread that tries to acquire it blocks until the lock is released.

Let’s implement the solution for the initial problem using the lock statement:

```csharp
using System.Threading;

public static class LockStatement
{
    private static int _counter;

    // Dedicated, private lock object shared by all threads.
    private static readonly object _lockObject = new object();

    public static int Execute()
    {
        var thread1 = new Thread(IncrementCounter);
        var thread2 = new Thread(IncrementCounter);
        var thread3 = new Thread(IncrementCounter);

        thread1.Start();
        thread2.Start();
        thread3.Start();

        thread1.Join();
        thread2.Join();
        thread3.Join();

        return _counter;
    }

    private static void IncrementCounter()
    {
        lock (_lockObject)
        {
            for (var cnt = 0; cnt < 100000; cnt++)
            {
                _counter++;
            }
        }
    }
}
```

And here is the call to the Execute() method:

```csharp
Console.WriteLine($"Lock statement result: {LockStatement.Execute()}"); // Lock statement result: 300000
```

With the lock statement, we gain precise control over the scope of the lock. The lock object, _lockObject in our example, can be any object accessible from all involved threads, but it must be a reference type, and the compiler rejects a value type in a lock statement. The reason is boxing: passing a value type where an object is expected wraps it in a new object every time, so each thread would lock a different object and nothing would be synchronized.
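The following sketch shows the boxing problem. It deliberately sidesteps the compiler check by calling Monitor.Enter(object) directly with an int: each call boxes counter into a new object, so Monitor.Exit() receives an object the thread never locked and throws a SynchronizationLockException. This is an anti-pattern shown only for illustration:

```csharp
using System;
using System.Threading;

var counter = 0;

// Boxing creates a new object on every conversion, so these are two different objects.
Console.WriteLine(object.ReferenceEquals(counter, counter)); // False

// Anti-pattern: Monitor.Enter(object) silently boxes counter into object #1 and locks it...
Monitor.Enter(counter);

var exitFailed = false;
try
{
    // ...while Monitor.Exit boxes it again into object #2, which this thread never locked.
    Monitor.Exit(counter);
}
catch (SynchronizationLockException)
{
    exitFailed = true;
}

Console.WriteLine($"Exit failed: {exitFailed}"); // Exit failed: True
```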

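If we have more than one independent critical section, we should give each its own lock object, so that threads working on unrelated data don’t block each other. When a thread must hold more than one lock at a time, every thread should acquire those locks in the same order to avoid deadlocks.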
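Here is a minimal sketch of both guidelines. The two pieces of shared state, orders and logEntries, are hypothetical and each is guarded by its own lock object; the operation that needs both always takes ordersLock first and logLock second, so no two threads can end up waiting on each other in a cycle:

```csharp
using System;
using System.Threading;

object ordersLock = new();
object logLock = new();
var orders = 0;
var logEntries = 0;

// Independent critical sections: each takes only the lock for its own data.
void AddOrder() { lock (ordersLock) { orders++; } }
void AddLogEntry() { lock (logLock) { logEntries++; } }

// Needs both locks: always acquire them in the same order (ordersLock, then logLock).
void AddOrderAndLog()
{
    lock (ordersLock)
    lock (logLock)
    {
        orders++;
        logEntries++;
    }
}

var threads = new[]
{
    new Thread(() => { for (var i = 0; i < 1000; i++) AddOrder(); }),
    new Thread(() => { for (var i = 0; i < 1000; i++) AddLogEntry(); }),
    new Thread(() => { for (var i = 0; i < 1000; i++) AddOrderAndLog(); }),
};

foreach (var thread in threads) thread.Start();
foreach (var thread in threads) thread.Join();

Console.WriteLine($"orders: {orders}, log entries: {logEntries}"); // orders: 2000, log entries: 2000
```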

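We should keep the code block under the lock statement short, so that it executes, and holds the lock, for the shortest possible time. Every other thread that needs the lock is blocked in the meantime.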
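Our LockStatement example holds the lock for the whole loop, so the three threads effectively run one after another. When the work can be split, a sketch like the following does most of it outside the lock and holds the lock only to merge the result. It assumes each thread’s part of the work is independent of the others:

```csharp
using System;
using System.Threading;

object lockObject = new();
var total = 0;

void IncrementCounter()
{
    // Do the bulk of the work on a local variable that no other thread can see.
    var localCount = 0;
    for (var cnt = 0; cnt < 100000; cnt++)
    {
        localCount++;
    }

    // Hold the lock only for the single update of the shared variable.
    lock (lockObject)
    {
        total += localCount;
    }
}

var threads = new[] { new Thread(IncrementCounter), new Thread(IncrementCounter), new Thread(IncrementCounter) };
foreach (var thread in threads) thread.Start();
foreach (var thread in threads) thread.Join();

Console.WriteLine($"Result: {total}"); // Result: 300000
```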

We could also lock on the object that contains the critical section by using the this keyword. However, that is not a good practice: code outside our class can lock on the same instance, which can cause unexpected contention or even deadlocks. For the same reason, we should avoid locking on Type instances (obtained with typeof) and on string literals, because they are shared across the whole application.
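The following sketch illustrates the string literal case with two hypothetical components that happen to lock on the same literal. Identical string literals are interned into a single object, so the two components actually share one lock and contend with each other, while a private lock object stays independent:

```csharp
using System;
using System.Threading;

object componentALock = "cache"; // hypothetical component A locks on a string literal
object componentBLock = "cache"; // hypothetical component B, written independently, does the same
object privateLock = new();      // a private lock object that nothing else can reach

var componentBCouldLock = true;
var privateLockAvailable = false;

lock (componentALock)
{
    var other = new Thread(() =>
    {
        // Identical literals are interned into one object, so B contends with A.
        componentBCouldLock = Monitor.TryEnter(componentBLock, 0);
        if (componentBCouldLock)
        {
            Monitor.Exit(componentBLock);
        }

        // The private object is unaffected by component A's lock.
        privateLockAvailable = Monitor.TryEnter(privateLock, 0);
        if (privateLockAvailable)
        {
            Monitor.Exit(privateLock);
        }
    });
    other.Start();
    other.Join();
}

Console.WriteLine($"Same object: {object.ReferenceEquals(componentALock, componentBLock)}, B could lock: {componentBCouldLock}, private lock available: {privateLockAvailable}");
// Same object: True, B could lock: False, private lock available: True
```

The same reasoning applies to this and typeof(...): any code that can reach the object can lock on it.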

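The body of the lock statement can’t be asynchronous. In other words, we can’t use the await keyword inside the statement; the compiler reports an error if we try. The lock belongs to the thread that acquired it, but after an await, the rest of the method may continue on a different thread, which would then try to release a lock it doesn’t own. Holding a lock while an await is pending would also block other code that needs the same lock for an unpredictable time, which can lead to deadlocks.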
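When a critical section does need to await, a common alternative is SemaphoreSlim with a count of one, which we can wait on asynchronously with WaitAsync(). Unlike a lock, SemaphoreSlim has no owner thread, so it doesn’t matter which thread releases it after the await. A minimal sketch using top-level statements, with Task.Yield() standing in for real asynchronous work:

```csharp
using System;
using System.Threading;
using System.Threading.Tasks;

using var gate = new SemaphoreSlim(initialCount: 1, maxCount: 1);
var counter = 0;

async Task IncrementAsync()
{
    for (var cnt = 0; cnt < 1000; cnt++)
    {
        // Waits asynchronously instead of blocking a thread.
        await gate.WaitAsync();
        try
        {
            // await is allowed here, unlike inside a lock statement.
            await Task.Yield();
            counter++;
        }
        finally
        {
            // Any thread may release a SemaphoreSlim, so it's fine if the
            // continuation after the await runs on a different thread.
            gate.Release();
        }
    }
}

await Task.WhenAll(IncrementAsync(), IncrementAsync(), IncrementAsync());
Console.WriteLine($"Result: {counter}"); // Result: 3000
```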

Monitor Class

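The lock statement is syntactic sugar for calls to the Monitor.Enter() and Monitor.Exit() methods wrapped in a try/finally block. The compiler also passes Monitor.Enter() a boolean flag that records whether the lock was acquired, and calls Monitor.Exit() in the finally block only if it was.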

Let’s implement the locking mechanism using the Monitor class:

```csharp
using System.Threading;

public static class MonitorClass
{
    private static int _counter;

    private static readonly object _lockObject = new();

    public static int Execute()
    {
        var thread1 = new Thread(IncrementCounter);
        var thread2 = new Thread(IncrementCounter);
        var thread3 = new Thread(IncrementCounter);

        thread1.Start();
        thread2.Start();
        thread3.Start();

        thread1.Join();
        thread2.Join();
        thread3.Join();

        return _counter;
    }

    private static void IncrementCounter()
    {
        // Tells the finally block whether this thread actually owns the lock.
        var lockTaken = false;
        try
        {
            Monitor.Enter(_lockObject, ref lockTaken);
            for (var cnt = 0; cnt < 100000; cnt++)
            {
                _counter++;
            }
        }
        finally
        {
            // Monitor.Exit() throws if the current thread doesn't own the lock.
            if (lockTaken)
            {
                Monitor.Exit(_lockObject);
            }
        }
    }
}
```

After that, we can call the Execute() method:

```csharp
Console.WriteLine($"Monitor class result: {MonitorClass.Execute()}"); // Monitor class result: 300000
```

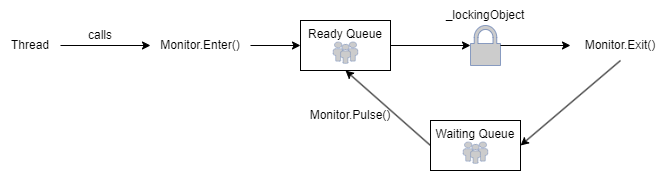

For a better understanding of the Monitor class, let’s look at the diagram:

When a thread calls the Monitor.Enter() method while another thread holds the lock on _lockObject, it waits in the Ready Queue. There can be more than one thread in this queue, but only one at a time acquires the exclusive lock, enters the critical section, and executes it. When that thread calls the Monitor.Exit() method, it releases the lock, and a thread from the Ready Queue can acquire it. A thread that holds the lock can also call the Monitor.Wait() method, which releases the lock and moves the thread to the Waiting Queue. It stays there until another thread holding the lock calls Monitor.Pulse(), which moves one waiting thread to the Ready Queue, or Monitor.PulseAll(), which moves all of them.

Besides Enter() and Exit(), the Monitor class implements other methods: Monitor.Pulse(), Monitor.PulseAll(), Monitor.Wait(), Monitor.TryEnter(), and Monitor.IsEntered(). With them, we have finer control over the execution of our threads. For example, a thread can give up the lock before it has finished its work, to wait for a result that another thread produces.
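As an illustration of Wait() and Pulse(), here is a minimal producer/consumer sketch that hands items over through a single hypothetical slot. Each thread waits while the slot is in the wrong state and pulses after changing it. The condition is checked in a while loop, the standard guard, because the state may have changed again by the time a woken thread reacquires the lock:

```csharp
using System;
using System.Collections.Generic;
using System.Threading;

object slotLock = new();
int? slot = null; // holds at most one item at a time
var received = new List<int>();

void Produce()
{
    for (var item = 1; item <= 5; item++)
    {
        lock (slotLock)
        {
            // Wait() releases slotLock and moves this thread to the waiting queue;
            // it reacquires the lock before returning.
            while (slot is not null)
            {
                Monitor.Wait(slotLock);
            }

            slot = item;
            Monitor.PulseAll(slotLock); // move the waiting consumer to the ready queue
        }
    }
}

void Consume()
{
    for (var count = 0; count < 5; count++)
    {
        lock (slotLock)
        {
            while (slot is null)
            {
                Monitor.Wait(slotLock);
            }

            received.Add(slot.Value);
            slot = null;
            Monitor.PulseAll(slotLock); // move the waiting producer to the ready queue
        }
    }
}

var producer = new Thread(Produce);
var consumer = new Thread(Consume);
producer.Start();
consumer.Start();
producer.Join();
consumer.Join();

Console.WriteLine(string.Join(", ", received)); // 1, 2, 3, 4, 5
```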

Mutex Class

Similar to the lock statement and the Monitor class, we can use the Mutex class to synchronize and coordinate threads created and controlled within our application.

Here is the implementation of the locking mechanism using the Mutex class:

```csharp
using System.Threading;

public class MutexClass
{
    private static int _counter;

    private static readonly Mutex mutex = new();

    public static int Execute()
    {
        var thread1 = new Thread(IncrementCounter);
        var thread2 = new Thread(IncrementCounter);
        var thread3 = new Thread(IncrementCounter);

        thread1.Start();
        thread2.Start();
        thread3.Start();

        thread1.Join();
        thread2.Join();
        thread3.Join();

        return _counter;
    }

    private static void IncrementCounter()
    {
        // Wait up to one second for ownership; give up if it isn't acquired.
        if (!mutex.WaitOne(1000))
        {
            return;
        }

        try
        {
            for (var cnt = 0; cnt < 100000; cnt++)
            {
                _counter++;
            }
        }
        finally
        {
            // Only the thread that owns the mutex may release it.
            mutex.ReleaseMutex();
        }
    }
}
```

We create one instance of the Mutex class. When a thread reaches the critical section, it calls the Mutex.WaitOne(int millisecondsTimeout) method, which tries to acquire ownership of the mutex. The method blocks the thread until ownership is acquired or the time-out interval elapses. If the method times out, it returns false: the thread doesn’t own the mutex, so it skips the critical section and must not call ReleaseMutex(), which throws an exception when called by a thread that doesn’t own the mutex. After the critical section, the owning thread releases the mutex by calling the Mutex.ReleaseMutex() method, which allows other threads to acquire it.

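A Mutex can also synchronize threads across process boundaries. When we give it a name, it becomes a system-wide object that other processes on the same machine can open, so it can coordinate threads outside our application as well.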

Let’s look at an example that uses a named mutex this way:

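```csharp
using System;
using System.Threading;

const string mutexName = "MutexDemo";
Mutex mutex;

try
{
    // Succeeds only if another process has already created a mutex with this name.
    mutex = Mutex.OpenExisting(mutexName);
    Console.WriteLine("More than one instance of the application is running");
}
catch (WaitHandleCannotBeOpenedException)
{
    // No mutex with this name exists yet, so this is the first instance: create it.
    mutex = new Mutex(false, mutexName);
    Console.WriteLine("Only this instance of application is running");
}

Console.ReadLine(); // keep this instance running while we start another one

// Keeping a reference until here also keeps the named mutex from being garbage collected.
mutex.Dispose();
```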

When the application starts, it tries to open an existing mutex with the same name using the Mutex.OpenExisting(string name) method. A named mutex is a system-wide object, so every process on the same computer that uses that name refers to the same mutex. If no such mutex exists, OpenExisting() throws a WaitHandleCannotBeOpenedException, and we create a new named mutex in the catch block.

Let’s compile the demo application from the repository and run it from the bin\Debug\net7.0 directory. With one instance running, let’s start a second instance of the same application. The first instance prints the message about being the only running instance, while the second one detects the first.

This is a common use of the Mutex class: detecting or preventing multiple instances of a program running simultaneously.
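Opening and then creating the mutex in two separate steps leaves a small window in which two instances started at the same moment could both fail to find the mutex and both consider themselves the only instance. A common alternative, sketched below, is the Mutex constructor with an out parameter that reports whether the call created the named mutex. The name "MutexDemo" is reused from the example above:

```csharp
using System;
using System.Threading;

const string mutexName = "MutexDemo";

// Requests initial ownership; createdNew reports whether this call created the named mutex.
using var mutex = new Mutex(true, mutexName, out var createdNew);

if (createdNew)
{
    Console.WriteLine("Only this instance of application is running");

    // ... the application's work would run here while this instance owns the mutex ...

    mutex.ReleaseMutex();
}
else
{
    // Another process created the mutex first, so this thread doesn't own it
    // and must not call ReleaseMutex().
    Console.WriteLine("More than one instance of the application is running");
}
```

Creating and checking happen in one call, so exactly one of the simultaneously started instances sees createdNew set to true.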

SpinLock Class

The SpinLock structure also provides an exclusive lock, but unlike the lock statement and the Monitor class, it doesn’t immediately block a waiting thread. Instead, the thread spins in a loop, repeatedly trying to acquire the lock on the critical section, on the assumption that it will soon become available. We call this technique busy waiting.

Blocking a thread involves suspending its execution, releasing computing resources to other threads, and switching the execution context, which can be a computationally expensive operation. When a lock is held only very briefly, spinning for a short time can be cheaper than blocking.

Let’s look at how we can implement the SpinLock mechanism:

```csharp
using System.Threading;

public static class SpinLockClass
{
    private static int _counter;

    // SpinLock is a mutable struct, so the field must not be readonly:
    // calling methods on a readonly struct field would operate on a copy.
    private static SpinLock spinLock = new();

    public static int Execute()
    {
        var thread1 = new Thread(IncrementCounter);
        var thread2 = new Thread(IncrementCounter);
        var thread3 = new Thread(IncrementCounter);

        thread1.Start();
        thread2.Start();
        thread3.Start();

        thread1.Join();
        thread2.Join();
        thread3.Join();

        return _counter;
    }

    private static void IncrementCounter()
    {
        // Each thread uses its own flag, which must be false before calling Enter().
        var lockTaken = false;
        try
        {
            spinLock.Enter(ref lockTaken);
            for (var cnt = 0; cnt < 100000; cnt++)
            {
                _counter++;
            }
        }
        finally
        {
            // Release the lock only if this thread actually acquired it.
            if (lockTaken)
            {
                spinLock.Exit();
            }
        }
    }
}
```

The SpinLock.Enter() method takes a boolean variable by reference. The variable must be false when we call the method, and Enter() sets it to true once the lock is acquired. Because every thread needs its own flag, we declare it as a local variable rather than sharing a field between threads. In the finally block, we check the flag and release the lock with the SpinLock.Exit() method only if the current thread acquired it.

Because a waiting thread keeps spinning and consuming CPU time, we should use SpinLock only for very short, fine-grained critical sections that execute frequently. It is essential to measure carefully whether this locking mechanism actually improves the application’s performance. Additionally, note that SpinLock is a value type optimized for performance and should not be copied, because a copy is a separate, independent lock. If we pass it as a parameter, we should pass it by reference.
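The following sketch illustrates the copying pitfall and the by-reference alternative. IncrementUnderLock() is a hypothetical helper written for this example:

```csharp
using System;
using System.Threading;

var original = new SpinLock(enableThreadOwnerTracking: false);

// Copying the struct copies its state, so the copy becomes an independent lock.
var copy = original;

var lockTaken = false;
original.Enter(ref lockTaken);
Console.WriteLine($"Original held: {original.IsHeld}, copy held: {copy.IsHeld}"); // Original held: True, copy held: False
original.Exit();

var counter = 0;

// Hypothetical helper: taking the SpinLock by ref means every caller uses the same instance.
void IncrementUnderLock(ref SpinLock spinLock)
{
    var taken = false;
    try
    {
        spinLock.Enter(ref taken);
        counter++;
    }
    finally
    {
        if (taken)
        {
            spinLock.Exit();
        }
    }
}

IncrementUnderLock(ref original);
IncrementUnderLock(ref original);
Console.WriteLine($"Counter: {counter}, original held afterwards: {original.IsHeld}"); // Counter: 2, original held afterwards: False
```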

The Non-Exclusive Locking Mechanism in C#

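Non-exclusive locks allow more than one thread to access a shared resource at the same time. They either limit the number of concurrent accesses to a fixed count or, as with reader/writer locks, let multiple threads read a resource concurrently while still giving writers exclusive access.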

Semaphore Class

We use the Semaphore class to limit the number of threads that can access a resource, or a limited pool of resources, concurrently. The Semaphore constructor requires at least two arguments:

```csharp
public Semaphore(int initialCount, int maximumCount)
```

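The initialCount parameter sets how many entries are available when the semaphore is created, that is, how many threads can enter it immediately without being blocked. The maximumCount parameter sets the maximum number of entries, and therefore the maximum number of threads that can be in the semaphore at the same time. Each successful WaitOne() call decrements the count, and each Release() call increments it; calling Release() when the count is already at maximumCount throws a SemaphoreFullException.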
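A small single-threaded sketch of how the count behaves, using an initial count of zero and a maximum count of two so that every step is deterministic:

```csharp
using System;
using System.Threading;

using var semaphore = new Semaphore(initialCount: 0, maximumCount: 2);

// With a count of zero, no thread can enter; WaitOne(0) returns false immediately.
var enteredAtStart = semaphore.WaitOne(0);

// Release() increments the count and returns the count before the call.
var countBeforeFirstRelease = semaphore.Release();  // 0 -> 1
var countBeforeSecondRelease = semaphore.Release(); // 1 -> 2

// The count is now at maximumCount, so another Release() throws.
var releasePastMaximumThrew = false;
try
{
    semaphore.Release();
}
catch (SemaphoreFullException)
{
    releasePastMaximumThrew = true;
}

// Each successful WaitOne() decrements the count again.
var enteredFirst = semaphore.WaitOne(0);  // 2 -> 1
var enteredSecond = semaphore.WaitOne(0); // 1 -> 0
var enteredThird = semaphore.WaitOne(0);  // count is 0, so this fails

Console.WriteLine($"{enteredAtStart} {countBeforeFirstRelease} {countBeforeSecondRelease} {releasePastMaximumThrew} {enteredFirst} {enteredSecond} {enteredThird}");
// False 0 1 True True True False
```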

Here is an example of the Semaphore with an initial count of two and a maximum count of four:

```csharp
using System;
using System.Threading;

public static class SemaphoreClass
{
    // Two entries are available at creation; the count can never exceed four.
    public static readonly Semaphore semaphore = new(2, 4);

    public static void Execute()
    {
        for (var cnt = 0; cnt < 6; cnt++)
        {
            Thread thread = new(DoWork)
            {
                Name = "Thread " + cnt
            };

            thread.Start();
        }
    }

    private static void DoWork()
    {
        Console.WriteLine($"{Thread.CurrentThread.Name} waits for the lock");

        // Blocks while the count is zero; decrements the count on entry.
        semaphore.WaitOne();
        try
        {
            Console.WriteLine($"{Thread.CurrentThread.Name} enters critical section");
            Thread.Sleep(500);
            Console.WriteLine($"{Thread.CurrentThread.Name} exits critical section");
        }
        finally
        {
            Console.WriteLine($"{Thread.CurrentThread.Name} releases the lock");

            // Increments the count, letting one waiting thread enter.
            semaphore.Release();
        }
    }
}
```

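We start six threads in parallel, each executing the DoWork() method. In this method, the thread waits to enter the semaphore with the WaitOne() method. Because the initial count is two and every thread releases only the entry it took, at most two threads are in the critical section at any time; the others block in WaitOne() until a thread finishes its work and calls the Release() method.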

Unlike Mutex and the lock statement, the Semaphore class doesn’t have the concept of an owner thread. Instead, any thread can release an entry by calling the Release() method on a Semaphore instance. This flexibility allows any thread to free up an entry, enabling other waiting threads to enter and proceed.

Furthermore, it’s worth noting that, like Mutex, the Semaphore class can synchronize both threads within our application and threads in other processes. On the other hand, the SemaphoreSlim class, a lightweight version of Semaphore, is designed for synchronization and coordination within a single process.
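Here is a minimal sketch of SemaphoreSlim used as a throttle inside one process: six tasks start at once, but at most two of them run the simulated work concurrently. The inside and maxInside counters are hypothetical, exist only to observe the limit, and are protected by an ordinary lock:

```csharp
using System;
using System.Linq;
using System.Threading;
using System.Threading.Tasks;

using var throttle = new SemaphoreSlim(initialCount: 2, maxCount: 2);
object statsLock = new();
var inside = 0;
var maxInside = 0;

async Task DoWorkAsync()
{
    await throttle.WaitAsync();
    try
    {
        lock (statsLock)
        {
            inside++;
            maxInside = Math.Max(maxInside, inside);
        }

        await Task.Delay(50); // simulated work

        lock (statsLock)
        {
            inside--;
        }
    }
    finally
    {
        throttle.Release();
    }
}

await Task.WhenAll(Enumerable.Range(0, 6).Select(_ => DoWorkAsync()));
Console.WriteLine($"Most tasks inside at once: {maxInside}");
```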

ReaderWriterLockSlim Class

The ReaderWriterLockSlim class is a locking mechanism in C# that provides two main types of locks: the reader lock and the writer lock. Multiple threads can hold the reader lock concurrently, as long as no thread holds the writer lock. When a thread acquires the writer lock, it blocks all other readers and writers from entering the critical section until it releases the lock.

Let’s take a look at an example implementation:

```csharp
using System;
using System.Threading;

public static class ReaderWriterLockSlimClass
{
    private static int _counter;

    private static readonly ReaderWriterLockSlim _readerWriterLockSlim = new();

    public static int Execute()
    {
        var readThread1 = new Thread(Read);
        var readThread2 = new Thread(Read);
        var readThread3 = new Thread(Read);

        readThread1.Start(1);
        readThread2.Start(2);
        readThread3.Start(3);

        var writeThread1 = new Thread(Write);
        var writeThread2 = new Thread(Write);

        writeThread1.Start(1);
        writeThread2.Start(2);

        readThread1.Join();
        readThread2.Join();
        readThread3.Join();
        writeThread1.Join();
        writeThread2.Join();

        return _counter;
    }

    private static void Read(object? id)
    {
        while (true)
        {
            _readerWriterLockSlim.EnterReadLock();
            try
            {
                // Read the shared value only while holding the reader lock.
                if (_counter >= 30) return;
                Console.WriteLine($"Reader {id} read counter: {_counter}");
            }
            finally
            {
                _readerWriterLockSlim.ExitReadLock();
            }

            Thread.Sleep(100);
        }
    }

    private static void Write(object? id)
    {
        while (true)
        {
            _readerWriterLockSlim.EnterWriteLock();
            try
            {
                // Check and increment under the same lock, so the counter stops exactly at 30.
                if (_counter >= 30) return;
                _counter++;
                Console.WriteLine($"Writer {id} increased counter to value {_counter}");
            }
            finally
            {
                _readerWriterLockSlim.ExitWriteLock();
            }

            Thread.Sleep(100);
        }
    }
}
```

We start three reader threads and two writer threads.

The reader threads execute the Read(object? id) method, where the argument is the integer passed to the Thread.Start() method. The Read() method acquires the reader lock with the EnterReadLock() method; multiple threads can hold this lock at the same time. Then it prints the value of the _counter variable and releases the lock with a call to the ExitReadLock() method.

The writer threads execute the Write(object? id) method with the same kind of argument. This method acquires the writer lock with a call to the EnterWriteLock() method. Only one thread at a time can hold this lock, and only while no thread holds the reader lock. After incrementing the _counter variable, it releases the lock with a call to the ExitWriteLock() method.
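These reader and writer rules can be checked directly. In this sketch, the main thread holds a reader lock while a second thread tries, without waiting, to take first a reader lock and then the writer lock. Each lock is released by the thread that acquired it, because ReaderWriterLockSlim tracks ownership per thread:

```csharp
using System;
using System.Threading;

using var rwLock = new ReaderWriterLockSlim();
var secondReaderEntered = false;
var writerEntered = false;

// The main thread takes a reader lock and keeps it while the other thread runs.
rwLock.EnterReadLock();

var other = new Thread(() =>
{
    // Another reader is admitted even though a reader lock is already held.
    secondReaderEntered = rwLock.TryEnterReadLock(0);
    if (secondReaderEntered)
    {
        rwLock.ExitReadLock();
    }

    // The writer is refused: it needs exclusive access, and a reader lock is still held.
    writerEntered = rwLock.TryEnterWriteLock(0);
    if (writerEntered)
    {
        rwLock.ExitWriteLock();
    }
});
other.Start();
other.Join();

rwLock.ExitReadLock();

Console.WriteLine($"Second reader entered: {secondReaderEntered}, writer entered: {writerEntered}");
// Second reader entered: True, writer entered: False
```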

Conclusion

The locking mechanism in C# plays a vital role in multithreaded applications by ensuring thread safety and synchronized access to shared resources. It provides a simple and effective way to coordinate the execution of threads, maintaining the consistency of the application’s state.

However, we should use locks carefully to avoid deadlocks and performance degradation.