As the popular saying suggests, “Divide and Rule”, the same principle can be applied in software development. There is an architectural pattern known as “Pipes and Filters”, which is used to accomplish just that. The idea is to break down a complex task into smaller tasks and execute them sequentially. In this article, we will delve deeper into this pattern and learn how it works.

With that, let’s dive in.

Understanding Pipes and Filters Architectural Pattern

Software developers utilize the Pipes and Filters architectural design pattern to establish a modular and flexible system for sequentially and independently processing data or tasks. This pattern proves invaluable when dealing with data streams that require traversal through multiple stages, and each stage is responsible for specific data transformations or operations. It is a prevalent architectural pattern in applications related to data processing pipelines, text processing, and similar scenarios.

Now, let’s delve deeper to gain a better understanding of how this design pattern works and its fundamental concepts.

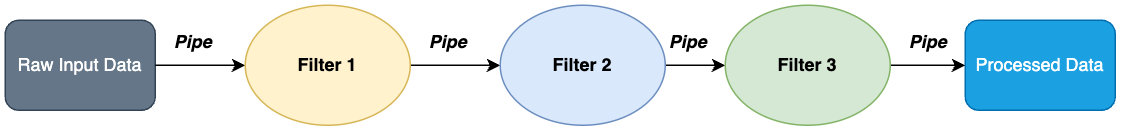

The Pipes and Filters pattern is a component-based architectural design pattern. It comprises multiple components known as “Filters”. Each filter is responsible for executing a specific data operation. Importantly, these filters intentionally operate in isolation from one another, ensuring that they have no direct knowledge of their position within the pipeline’s sequence. These filters are interconnected within the architecture through pipes, which serve as conduits for seamlessly transmitting data from one filter to the next.

These pipes facilitate filter communication by enabling smooth data transfer from one processing stage to the next. This data processing methodology inherently follows a sequential order. In other words, data progresses through the filters step by step, with each filter actively applying its designated operation to the data:

Different-colored ellipses mark all the filters, and a pipe connects them. Raw data flows through these filters, yielding processed data or the final output. This sequential approach not only simplifies the modular design but also provides a readily extendable structure because it allows for the addition or removal of filters without disrupting the overall system.

Now, let’s examine an implementation of this pattern.

An Example Implementation

We’ll be implementing a mock “Sentiment Analyzer Bot.” The purpose of this bot is to provide a sentiment value (“Positive,” “Negative,” “Neutral”) based on the input text provided to it. This scenario fits well into any text-processing pipeline. So, we shall be creating three filters connected via a pipe.

Let’s first start by creating these filters.

Creating the Filters

The IFilter interface will serve as the foundation for the subsequent development of our sentiment analysis filters and their integration into the processing pipeline. Let’s create it:

public interface IFilter

{

public string Process(string input);

}

The IFilter interface includes the Process() method, which takes a string as an input parameter and returns a string as output. So, now that we have set up the interface, we can proceed to implement our first filter.

Our first filter will convert input text to lowercase, preparing it for further analysis in the sentiment analyzer pipeline:

public class LowerCaseFilter : IFilter

{

public string Process(string input)

{

return input.ToLower();

}

}

The LowerCaseFilter class implements the IFilter interface. The Process() method converts the input string to lowercase using the ToLower() method.

Let’s move on to the next filter, which is the removal of stop words:

public class RemoveStopWordsFilter : IFilter

{

private static readonly HashSet<string> StopWords = new()

{

"a", "an", "and", "are", "as", "at", "be", "but", "by", "my", "not", "of", "on", "or", "the", "to"

};

public string Process(string input)

{

var words = input.Split(new[] { " ", "\t", "\n", "\r" }, StringSplitOptions.RemoveEmptyEntries);

var filterWords = words.Where(x => !StopWords.Contains(x));

return string.Join(" ", filterWords);

}

}

Here, we remove StopWords from the input string after splitting it into an array using the usual text separators. Finally, it filters out the words in the StopWords HashSet and then returns the final filtered string.

With two filters done, now comes the final one, which will analyze the actual sentiment:

public class SentimentFilter : IFilter

{

public string Process(string input)

{

var positiveWords = new HashSet<string>

{

"good", "great", "awesome", "fantastic", "happy", "love", "like"

};

var negativeWords = new HashSet<string>

{

"bad", "terrible", "awful", "hate", "dislike", "sad"

};

var words = input.Split(new[] { " ", "\t", "\n", "\r" }, StringSplitOptions.RemoveEmptyEntries);

var positiveCount = words.Count(x => positiveWords.Contains(x));

var negativeCount = words.Count(x => negativeWords.Contains(x));

if (positiveCount > negativeCount) return "Positive";

return negativeCount > positiveCount ? "Negative" : "Neutral";

}

}

Our final filter class,SentimentFilter, analyzes text input for sentiment and categorizes it as “Positive,” “Negative,” or “Neutral” based on the occurrence of predefined positive and negative words. It counts the occurrences of these words in the input text and determines the sentiment based on which type of word (positive or negative) is more prevalent.

Now that filters are created, it’s time to connect them to the pipe.

Create the Pipe

Let’s combine our filters in the SentimentAnalyzerPipe class:

public static class SentimentAnalyzerPipe

{

public static string Analyze(string text)

{

IFilter[] sentimentPipeLine =

{

new LowerCaseFilter(),

new RemoveStopWordsFilter(),

new SentimentFilter()

};

return sentimentPipeLine.Aggregate(text, (current, filter) => filter.Process(current));

}

}

We create the sentimentPipeLine by initializing the array of IFilter type. Processing each filter sequentially in the pipeline is crucial. In our case, the sequence is LowerCaseFilter => RemoveStopWordsFilter => SentimentFilter. Then, we loop into the array using the LINQ’s Aggregate() method and process each filter by invoking their Process() method.

Now that we have set all filters and their connecting pipe let’s execute the pipeline.

Execute the Pipe

With our pipe created, let’s analyze some text:

var positiveSentiment = SentimentAnalyzerPipe.Analyze("I am happy");

var negativeSentiment = SentimentAnalyzerPipe.Analyze("I am sad");

var neutralSentiment = SentimentAnalyzerPipe.Analyze("I am ok");

We analyze the sentiment of three input sentences using the SentimentAnalyzerPipe. It assigns the sentiment analysis results to three variables: positiveSentiment, negativeSentiment, and neutralSentiment. The output is “Positive”, “Negative” and “Neutral”, respectively.

Moving forward, let’s list the pros and cons of this pattern to determine its appropriate use.

Pros of Pipes and Filters

The Pipes and Filters pattern offers several advantages. Firstly, it promotes modularity, making the development, testing, and maintenance of individual components easier, enhancing the overall robustness of the system. Additionally, the system provides flexibility, allowing filters to be added, removed, or replaced without disrupting the entire pipeline.

This adaptability makes it responsive to changing requirements. Furthermore, filters promote reusability, reducing code duplication and increasing efficiency by allowing their use in different pipelines.

Finally, by introducing parallelism, the system can enhance its performance in resource-intensive processing scenarios, making it highly scalable.

Cons of Pipes and Filters

However, there are some disadvantages to consider. Firstly, managing the data flow between filters and handling error conditions can introduce complexities into the system’s architecture. Therefore, careful consideration is required to maintain a streamlined workflow.

Moreover, when data must traverse numerous filters, additional processing can introduce latency, affecting real-time processing capabilities. Hence, this latency should be carefully managed.

Additionally, implementing interactions or dependencies between filters can be challenging due to the intentional isolation of filters. This design, while promoting modularity, may require thoughtful solutions when inter-filter communication is necessary.

Conclusion

The Pipes and Filters pattern is an architectural design that enables the decomposition of a complex task into smaller, independent components referred to as “Filters”. Then, we use Pipes to interconnect these filters, facilitating the smooth data flow between them. This design is effective because it allows for the modularization of complex tasks and, as a result, it promotes a streamlined and efficient data processing pipeline.