In this article, we are going to learn how to handle uploading large files in ASP.NET Core. We are going to talk about why using byte[] or MemoryStream can be problematic and discuss the benefits of using streams instead.

By leveraging streams, we can significantly improve the performance and scalability of our application when handling large files.

Let’s start.


Advantages of Using Streams over byte[] or MemoryStream for Large File Handling

Streams are generally better than using byte[] or MemoryStream when handling large files for several reasons.

First, streams can significantly reduce memory usage. With byte[] or MemoryStream, the entire file is loaded into memory before processing, which can cause performance problems or out-of-memory errors, particularly with large files. In contrast, streams process the file in blocks, so only a small buffer has to be in memory at any time.

Secondly, streams can improve processing speed because reading and writing can overlap. The application can start processing the first blocks while the rest of the file is still arriving, instead of waiting for the whole upload to finish.

Finally, streams enable the direct streaming of large files over the network. With byte[] or MemoryStream, we have to load the entire file into memory before sending it, which can be slow and inefficient. With streams, this is not necessary: they transmit data in blocks, reducing latency and improving transmission performance.
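To make the difference concrete, here is a minimal sketch that copies a file in both ways. The class and method names (StreamCopyDemo, CopyBufferedAsync, CopyStreamedAsync) and the 80 KB buffer size are assumptions made for this illustration and are not part of the article's project.

```csharp
using System;
using System.IO;
using System.Threading.Tasks;

public static class StreamCopyDemo
{
    // Buffered approach: the whole file ends up in one byte[],
    // so memory usage grows with the size of the file.
    public static async Task<long> CopyBufferedAsync(string sourcePath, string targetPath)
    {
        byte[] allBytes = await File.ReadAllBytesAsync(sourcePath);
        await File.WriteAllBytesAsync(targetPath, allBytes);
        return allBytes.LongLength;
    }

    // Streamed approach: only one block of bufferSize bytes is held in memory
    // at a time, regardless of how large the file is.
    public static async Task<long> CopyStreamedAsync(
        string sourcePath, string targetPath, int bufferSize = 81920)
    {
        await using var source = File.OpenRead(sourcePath);
        await using var target = File.Create(targetPath);

        var buffer = new byte[bufferSize];
        long totalBytes = 0;
        int bytesRead;

        // ReadAsync returns 0 once the end of the source stream is reached.
        while ((bytesRead = await source.ReadAsync(buffer.AsMemory(0, bufferSize))) > 0)
        {
            await target.WriteAsync(buffer.AsMemory(0, bytesRead));
            totalBytes += bytesRead;
        }

        return totalBytes;
    }
}
```

Both methods produce the same file, but only the second one keeps its memory footprint independent of the file size.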

Enabling Kestrel Support for Large Files

We need to establish certain prerequisites and configurations before starting the development process.

Firstly, we need to configure Kestrel in the Program.cs file to allow the upload of large files.

    builder.WebHost.ConfigureKestrel(serverOptions =>
    {
        serverOptions.Limits.MaxRequestBodySize = long.MaxValue;
    });

By default, Kestrel limits the request body to 30,000,000 bytes (about 28.6 MB). By setting MaxRequestBodySize to long.MaxValue, we effectively remove that limit and allow the uploading of files of any size. We should be careful with this and configure a value that matches our needs and requirements.

Also, instead of raising the limit globally, we can apply the [RequestSizeLimit(<Size in bytes>)] attribute to raise it for a specific action only.

Next, we need to create a new class called FileUploadSummary, which describes the result of the file upload POST request:
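As a rough sketch of the per-action approach, the controller below applies the limit to a single endpoint. The controller name, the routes, and the 500 MB value are hypothetical and chosen only for this example; RequestSizeLimit and DisableRequestSizeLimit are the built-in MVC attributes.

```csharp
using Microsoft.AspNetCore.Mvc;

[ApiController]
[Route("api/[controller]")]
public class LimitedUploadController : ControllerBase
{
    // Hypothetical cap of 500 MB, expressed in bytes.
    private const long MaxUploadBytes = 500L * 1024 * 1024;

    // Only this action accepts bodies of up to MaxUploadBytes;
    // every other endpoint keeps the server-wide limit.
    [HttpPost("upload")]
    [RequestSizeLimit(MaxUploadBytes)]
    public IActionResult Upload() => Ok();

    // Removes the body size limit for this action only.
    [HttpPost("upload-unlimited")]
    [DisableRequestSizeLimit]
    public IActionResult UploadUnlimited() => Ok();
}
```

Scoping the limit this way keeps the rest of the API protected against oversized requests.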

    public class FileUploadSummary
    {
        public int TotalFilesUploaded { get; set; }
        public string TotalSizeUploaded { get; set; }
        public IList<string> FilePaths { get; set; } = new List<string>();
        public IList<string> NotUploadedFiles { get; set; } = new List<string>();
    }

We also use the MultipartFormDataAttribute action filter to validate the content type of incoming requests:

    [AttributeUsage(AttributeTargets.Method | AttributeTargets.Class)]
    public class MultipartFormDataAttribute : ActionFilterAttribute
    {
        public override void OnActionExecuting(ActionExecutingContext context)
        {
            var request = context.HttpContext.Request;

            if (request.HasFormContentType
                && request.ContentType.StartsWith("multipart/form-data", StringComparison.OrdinalIgnoreCase))
            {
                return;
            }

            context.Result = new StatusCodeResult(StatusCodes.Status415UnsupportedMediaType);
        }
    }

This ensures that the request’s Content-Type header starts with multipart/form-data before the Upload method in the FileController executes. Otherwise, the filter short-circuits the request and returns a 415 Unsupported Media Type response.

Upload Large Files Using Streams

Before we start modifying our controller, we have to add one more attribute to our solution:

    [AttributeUsage(AttributeTargets.Class | AttributeTargets.Method)]
    public class DisableFormValueModelBindingAttribute : Attribute, IResourceFilter
    {
        public void OnResourceExecuting(ResourceExecutingContext context)
        {
            var factories = context.ValueProviderFactories;
            factories.RemoveType<FormValueProviderFactory>();
            factories.RemoveType<FormFileValueProviderFactory>();
            factories.RemoveType<JQueryFormValueProviderFactory>();
        }

        public void OnResourceExecuted(ResourceExecutedContext context)
        {
        }
    }

We have to disable form value model binding because we don’t want the model binders to inspect the body and buffer it in memory or on disk. This would defeat the purpose of using streams.

Now, let’s take a look at the controller action that uses streams to upload large files:

    [HttpPost("upload-stream-multipartreader")]
    [ProducesResponseType(StatusCodes.Status201Created)]
    [ProducesResponseType(StatusCodes.Status415UnsupportedMediaType)]
    [MultipartFormData]
    [DisableFormValueModelBinding]
    public async Task<IActionResult> Upload()
    {
        var fileUploadSummary = await _fileService.UploadFileAsync(HttpContext.Request.Body, Request.ContentType);

        return CreatedAtAction(nameof(Upload), fileUploadSummary);
    }

Here, we have an action method with an HTTP POST attribute that specifies the upload endpoint. It also has the custom MultipartFormData action filter attribute, which ensures that the incoming request has the correct content type of multipart/form-data. We also apply the DisableFormValueModelBinding filter to turn off form value model binding for this action.

The method uses the UploadFileAsync method from the _fileService service to upload the file. The UploadFileAsync method takes in the request body stream and content type, which are obtained from the HttpContext.Request.Body and Request.ContentType properties respectively.

Finally, the method returns a 201 Created result with a fileUploadSummary object that contains information about the uploaded files.

Now, let’s take a look at the FileService class behind this action. It provides a service method that reads files from the request body stream and saves them to a folder:

    public async Task<FileUploadSummary> UploadFileAsync(Stream fileStream, string contentType)
    {
        var fileCount = 0;
        long totalSizeInBytes = 0;

        var boundary = GetBoundary(MediaTypeHeaderValue.Parse(contentType));
        var multipartReader = new MultipartReader(boundary, fileStream);
        var section = await multipartReader.ReadNextSectionAsync();

        var filePaths = new List<string>();
        var notUploadedFiles = new List<string>();

        while (section != null)
        {
            var fileSection = section.AsFileSection();
            if (fileSection != null)
            {
                totalSizeInBytes += await SaveFileAsync(fileSection, filePaths, notUploadedFiles);
                fileCount++;
            }

            section = await multipartReader.ReadNextSectionAsync();
        }

        return new FileUploadSummary
        {
            TotalFilesUploaded = fileCount,
            TotalSizeUploaded = ConvertSizeToString(totalSizeInBytes),
            FilePaths = filePaths,
            NotUploadedFiles = notUploadedFiles
        };
    }

The UploadFileAsync method uploads files to the server and returns a summary of the upload. It reads the multipart data from the input stream and saves each file to disk. It also calculates the total size of the uploaded files and the number of files uploaded. The FileUploadSummary includes the total size and number of uploaded files, the paths to the uploaded files, and a list of files that could not be uploaded.
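The method above relies on the GetBoundary and SaveFileAsync helpers, which are not shown here. The following is only a sketch of how they could look, not the code from the repository. The UploadHelpersSketch class, the FilesUploaded folder name, and the list of allowed extensions are assumptions made for this illustration.

```csharp
using System;
using System.Collections.Generic;
using System.IO;
using System.Threading.Tasks;
using Microsoft.AspNetCore.WebUtilities;
using Microsoft.Net.Http.Headers;

public static class UploadHelpersSketch
{
    // Assumed target folder and allow-list; adjust both to the real requirements.
    private const string UploadsFolder = "FilesUploaded";
    private static readonly HashSet<string> AllowedExtensions =
        new(StringComparer.OrdinalIgnoreCase) { ".png", ".zip" };

    // Extracts the multipart boundary from the parsed Content-Type header.
    public static string GetBoundary(MediaTypeHeaderValue contentType)
    {
        var boundary = HeaderUtilities.RemoveQuotes(contentType.Boundary).Value;
        if (string.IsNullOrWhiteSpace(boundary))
        {
            throw new InvalidDataException("Missing content-type boundary.");
        }

        return boundary;
    }

    // Streams one file section to disk and returns the number of bytes written,
    // or 0 if the file was rejected.
    public static async Task<long> SaveFileAsync(
        FileMultipartSection fileSection,
        IList<string> filePaths,
        IList<string> notUploadedFiles)
    {
        // Drop any directory part sent by the client before building the path.
        var fileName = Path.GetFileName(fileSection.FileName);

        if (fileSection.FileStream is null
            || !AllowedExtensions.Contains(Path.GetExtension(fileName)))
        {
            notUploadedFiles.Add(fileName);
            return 0;
        }

        Directory.CreateDirectory(UploadsFolder);
        var filePath = Path.Combine(UploadsFolder, fileName);

        await using var target = new FileStream(
            filePath, FileMode.Create, FileAccess.Write, FileShare.None,
            bufferSize: 81920, useAsync: true);

        // CopyToAsync moves the section to disk block by block.
        await fileSection.FileStream.CopyToAsync(target);
        await target.FlushAsync();

        filePaths.Add(filePath);
        return target.Length;
    }
}
```

The important part is that the section stream is copied straight into a FileStream, so the file content is never collected in a byte[] or MemoryStream.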

You can check the entire code of the service in our GitHub repo.

Uploading Large Files with Postman

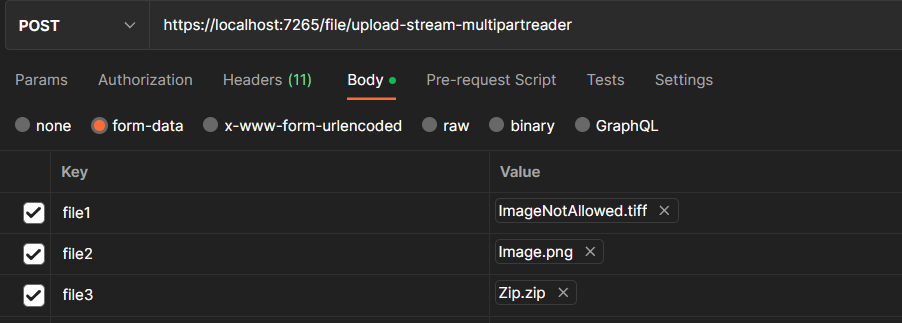

Now, let’s take a look at how we can send files using Postman:

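We need to select the form-data option and add a key-value pair for each file, where the key is the name of the file and the value is the file itself. We also need the Content-Type header to be "multipart/form-data; boundary=some value" in the Headers tab in Postman. Postman usually generates this header, including the boundary, automatically when form-data is selected, so we should only set it manually if it is missing.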

Here we have the server response:

    {
      "totalFilesUploaded": 3,
      "totalSizeUploaded": "5.93MB",
      "filePaths": [
        "path_to_file\\Image.png",
        "path_to_file\\FilesUploaded\\Zip.zip"
      ],
      "notUploadedFiles": [
        "ImageNotAllowed.tiff"
      ]
    }

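The response shows the total number of files uploaded, the total size of the files, and the paths of the uploaded files. Additionally, there is a list of files that were rejected because their type is not allowed. Note that the service increments the counter for every file section it reads, so the total of 3 includes the rejected file.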
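The totalSizeUploaded value is a human-readable string produced by the ConvertSizeToString method, which is not shown in this article. A formatter along the following lines could produce values such as "5.93MB". This is a sketch under assumptions: the SizeFormatter class, the 1024-based units, and the two-decimal rounding are not taken from the original code.

```csharp
using System.Globalization;

public static class SizeFormatter
{
    private static readonly string[] Units = { "B", "KB", "MB", "GB", "TB" };

    // Converts a byte count into a short string such as "1.5KB" or "5MB".
    public static string ConvertSizeToString(long bytes)
    {
        double size = bytes;
        var unitIndex = 0;

        // Move to the next unit while the value still has 1024 or more of the current one.
        while (size >= 1024 && unitIndex < Units.Length - 1)
        {
            size /= 1024;
            unitIndex++;
        }

        // "0.##" keeps at most two decimals and drops trailing zeros.
        return size.ToString("0.##", CultureInfo.InvariantCulture) + Units[unitIndex];
    }
}
```

Using the invariant culture keeps the decimal separator stable regardless of the server's regional settings.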

And here we have the response we would receive if we try to send a POST request with a Content-Type other than multipart/form-data:

    {
      "type": "https://tools.ietf.org/html/rfc7231#section-6.5.13",
      "title": "Unsupported Media Type",
      "status": 415,
      "traceId": "00-eade1095704664c0559a58157b192b0c-a6b99260dc6e82ce-00"
    }

The response includes a 415 Unsupported Media Type status code along with a traceId to help with debugging.
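The same multipart request can also be sent from code instead of Postman. Here is a minimal client sketch based on HttpClient and MultipartFormDataContent. The UploadClient class, the "files" field name, and the request URL passed by the caller are assumptions made for this example.

```csharp
using System.IO;
using System.Net.Http;
using System.Net.Http.Headers;
using System.Threading.Tasks;

public static class UploadClient
{
    // Builds a multipart/form-data body with one part per file.
    // MultipartFormDataContent generates the boundary for us.
    public static MultipartFormDataContent BuildForm(params string[] filePaths)
    {
        var form = new MultipartFormDataContent();

        foreach (var path in filePaths)
        {
            // StreamContent reads from the file stream instead of a preloaded byte[].
            var fileContent = new StreamContent(File.OpenRead(path));
            fileContent.Headers.ContentType = new MediaTypeHeaderValue("application/octet-stream");

            // "files" is an assumed form field name.
            form.Add(fileContent, "files", Path.GetFileName(path));
        }

        return form;
    }

    // Posts the files and returns the raw JSON response body.
    public static async Task<string> UploadAsync(
        HttpClient client, string requestUri, params string[] filePaths)
    {
        // Disposing the form also disposes the parts and their file streams.
        using var form = BuildForm(filePaths);
        using var response = await client.PostAsync(requestUri, form);

        response.EnsureSuccessStatusCode();
        return await response.Content.ReadAsStringAsync();
    }
}
```

A call could look like await UploadClient.UploadAsync(client, uploadUrl, "Image.png", "Zip.zip"), where uploadUrl is the full address of the upload-stream-multipartreader endpoint in our environment.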

Conclusion

Uploading large files can be a challenging task, but utilizing streams can greatly simplify the process. With streams, we can efficiently read and write data in small chunks, minimizing memory usage and allowing us to handle large files without performance issues.

By implementing the techniques and strategies we have covered in this article, we can now confidently handle large file uploads in our ASP.NET Core applications.