In this article, we embark on a journey to explore the depths of the ConcurrentQueue class in C#.

We’ll delve into its fundamental concepts, its usage, and its role in ensuring thread safety while enabling parallelism. By the end, we’ll have a comprehensive understanding of how to utilize the ConcurrentQueue class to its fullest potential, enabling us to build efficient and responsive applications that gracefully handle concurrent data processing.

So, let’s dive in and uncover the world of the ConcurrentQueue class, where safe multi-threaded operations and parallelism converge to empower our C# applications.

Understanding ConcurrentQueue

Firstly, we will lay the foundation by exploring the fundamental concepts behind queues in data processing and introducing the ConcurrentQueue class within the System.Collections.Concurrent namespace.

Queue Concept in Data Processing

Before we dive into the specifics of the ConcurrentQueue class, let’s take a moment to understand the importance of queues in data processing.

From a data-structure perspective, there are two common orders in which items can be processed: first-in, first-out (FIFO) and last-in, first-out (LIFO).

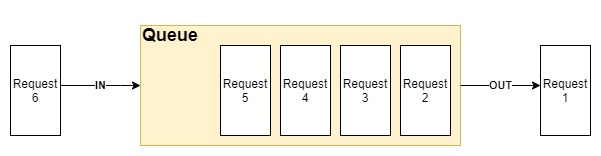

A queue is a fundamental data structure that operates on the principle of first in first out (FIFO). This means that the first element added to the queue is the first one to be removed:

As we can see above, the incoming requests form a queue and await processing by appropriate handlers.

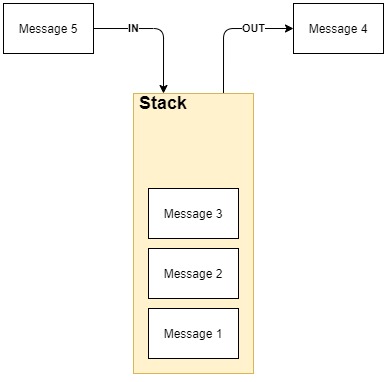

On the other hand, a stack is an example of a queue that operates on the principle of last-in, first-out (LIFO). In this case, it is the last element added to the queue that will be removed in the first place:

As we can see, incoming messages are stacked one on another and only the top element can be retrieved for processing.

While both stacks and queues store collections of items, it is important to emphasize that they are fundamentally distinct. They serve different purposes and exhibit different behaviors, so we should treat each of them as a separate, first-class data structure rather than as a variant of the other.

As we can see, queues and stacks play a crucial role in managing data in scenarios where the order of processing matters. They find applications in tasks like managing print jobs in a printer queue, handling requests in web servers, and more.

ConcurrentQueue and Concurrency

Now that we’ve grasped the significance of queues, let’s introduce the star of our article: the ConcurrentQueue class.

The ConcurrentQueue class is part of the System.Collections.Concurrent namespace in C#, which offers a collection of thread-safe data structures designed for use in multi-threaded applications.

The ConcurrentQueue class lets multiple threads share data without explicit synchronization mechanisms such as locks. Each individual Enqueue, TryDequeue, and TryPeek call is atomic, so enqueueing and dequeuing from different threads cannot corrupt the queue’s internal state. Note, however, that a sequence of calls (for example, checking Count and then dequeuing) is not atomic as a whole.

This makes it an indispensable tool when building applications that require efficient parallelism and synchronization.

In addition to the ConcurrentQueue class, the System.Collections.Concurrent namespace provides other thread-safe collections, such as ConcurrentDictionary, ConcurrentBag, and ConcurrentStack classes.
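To see what dropping explicit synchronization means in practice, here is a minimal sketch that fills a regular Queue<T>, guarded by a lock, and a ConcurrentQueue<T> from parallel loop iterations. The item count and variable names are arbitrary choices for illustration.

```csharp
using System;
using System.Collections.Concurrent;
using System.Collections.Generic;
using System.Threading.Tasks;

const int ItemCount = 1000;

// Queue<T> is not thread-safe, so every access must be wrapped in a lock.
var plainQueue = new Queue<int>();
var gate = new object();
Parallel.For(0, ItemCount, i =>
{
    lock (gate)
    {
        plainQueue.Enqueue(i);
    }
});

// ConcurrentQueue<T> synchronizes internally, so no lock is needed.
var concurrentQueue = new ConcurrentQueue<int>();
Parallel.For(0, ItemCount, i => concurrentQueue.Enqueue(i));

Console.WriteLine($"Queue<T> with lock: {plainQueue.Count}");
Console.WriteLine($"ConcurrentQueue<T>: {concurrentQueue.Count}");
```

Both queues end up with 1,000 items, but only the Queue<T> version needs the lock; removing it would make the result unpredictable.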

Basic Usage of ConcurrentQueue in C#

Now, let’s dive into the practical aspects of using the ConcurrentQueue class in our C# applications.

Instantiating ConcurrentQueue

First, before we even start using a ConcurrentQueue class, we need to create an instance of it.

We can create an empty queue like so:

```csharp
ConcurrentQueue<int> myQueue = new ConcurrentQueue<int>();
```

Alternatively, if we already have a collection of elements that we would like to add to the queue, we can use the constructor that accepts an IEnumerable<T>:

```csharp
ConcurrentQueue<int> myQueue = new ConcurrentQueue<int>(itemsList);
```

This creates a new ConcurrentQueue instance and adds all elements from itemsList to it, in the order in which itemsList enumerates them.
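The following sketch shows both constructors side by side; itemsList here is just a small example list we define ourselves.

```csharp
using System;
using System.Collections.Concurrent;
using System.Collections.Generic;

// An empty queue.
var emptyQueue = new ConcurrentQueue<int>();

// A queue pre-filled from any IEnumerable<T>; items keep their enumeration order.
var itemsList = new List<int> { 10, 20, 30 };
var myQueue = new ConcurrentQueue<int>(itemsList);

Console.WriteLine(emptyQueue.IsEmpty);          // True
Console.WriteLine(string.Join(", ", myQueue));  // 10, 20, 30
```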

Enqueueing Items

Afterwards, we can use the Enqueue method to add elements to our queue in a safe and orderly manner:

```csharp
myQueue.Enqueue(item);
```

As simple as that, we have added an item instance to our queue.

Now, what if we want to enqueue multiple items at once? Unfortunately, ConcurrentQueue offers no method for enqueueing a range of items, so we are limited to adding them to the queue one by one:

```csharp
for (int i = 0; i < 10000; i++)
{
    myQueue.Enqueue(i);
}
```

However, as we mentioned previously, we can leverage a constructor to transform a list of our items into a new ConcurrentQueue instance.
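Because Enqueue is thread-safe, several threads can call it at the same time. This sketch (the four tasks and the 2,500-item ranges are arbitrary) fills one queue from multiple tasks and checks that every value arrives exactly once:

```csharp
using System;
using System.Collections.Concurrent;
using System.Linq;
using System.Threading.Tasks;

var myQueue = new ConcurrentQueue<int>();

// Four tasks enqueue 2,500 distinct values each, concurrently.
var tasks = Enumerable.Range(0, 4)
    .Select(worker => Task.Run(() =>
    {
        for (int i = 0; i < 2500; i++)
        {
            myQueue.Enqueue(worker * 2500 + i);
        }
    }))
    .ToArray();

Task.WaitAll(tasks);

// Nothing is lost or duplicated, although values from different tasks interleave.
Console.WriteLine(myQueue.Count);               // 10000
Console.WriteLine(myQueue.Distinct().Count());  // 10000
```

Items from different tasks interleave in the queue, but each task’s own items keep their relative order.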

Dequeueing Items

Equally important is dequeuing items from our queue, which is just as straightforward as enqueuing them.

We can use the TryDequeue method to safely retrieve and remove an item from the queue:

```csharp
if (myQueue.TryDequeue(out var item))
{
    // Process item
}
```

The TryDequeue method not only dequeues an item but also indicates whether the operation was successful.

For example, when we try to dequeue an item from an empty queue, the method does not throw an exception; it simply returns false and sets the out parameter to the default value of the item type. For that reason, it is crucial that we check the return value and handle the failure case appropriately.
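A short sketch of both outcomes, draining a two-item queue and then calling TryDequeue once more on the now-empty queue (the string values are placeholders):

```csharp
using System;
using System.Collections.Concurrent;
using System.Collections.Generic;

var myQueue = new ConcurrentQueue<string>();
myQueue.Enqueue("first");
myQueue.Enqueue("second");

// Drain the queue: items come out in FIFO order.
var dequeued = new List<string>();
while (myQueue.TryDequeue(out var item))
{
    dequeued.Add(item);
}
Console.WriteLine(string.Join(", ", dequeued));   // first, second

// On an empty queue, TryDequeue returns false instead of throwing,
// and the out parameter receives default(string), which is null.
bool success = myQueue.TryDequeue(out var missing);
Console.WriteLine(success);            // False
Console.WriteLine(missing is null);    // True
```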

Iterating a ConcurrentQueue

Iterating through the contents of a queue is a relatively uncommon operation in data processing, and it might seem to contradict the purpose of a queue.

Nevertheless, C# allows such an operation, because ConcurrentQueue<T> implements IEnumerable<T>. What’s more, enumeration is designed to remain safe while other threads keep modifying the queue.

As the official documentation states, the GetEnumerator method creates a moment-in-time snapshot of the queue, which lets us safely iterate through its contents.

Consequently, it is important to remember that this snapshot won’t reflect any changes made to the queue after GetEnumerator is called.

We can iterate over a queue by using a foreach loop:

```csharp
foreach (var item in myQueue)
{
    // Process item
}
```

Iterating does not remove anything from the queue: every item we visit remains available to be dequeued by our handlers.
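The snapshot behavior can be observed directly. In this sketch we enqueue new items while iterating; the loop only visits the items that were present when iteration began, and, unlike a List<T>, the queue does not throw an InvalidOperationException for being modified during enumeration:

```csharp
using System;
using System.Collections.Concurrent;
using System.Collections.Generic;

var myQueue = new ConcurrentQueue<int>(new[] { 1, 2, 3 });
var seen = new List<int>();

// The enumerator works on a snapshot taken when iteration begins,
// so items enqueued inside the loop are not visited.
foreach (var item in myQueue)
{
    seen.Add(item);
    myQueue.Enqueue(item * 10);
}

Console.WriteLine(string.Join(", ", seen));     // 1, 2, 3
// Iteration removed nothing; the new items were appended after the originals.
Console.WriteLine(string.Join(", ", myQueue));  // 1, 2, 3, 10, 20, 30
```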

TryPeek Method

Next, the TryPeek method is a valuable addition to the ConcurrentQueue class that allows us to examine the item at the front of the queue without removing it.

This is particularly useful when we want to inspect the next item to be processed without altering the queue’s state:

```csharp
if (myQueue.TryPeek(out var item))
{
    // Process item (without removing it)
}
```

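Additionally, the TryPeek method gracefully handles the scenario in which the queue is empty: instead of throwing an exception, it returns false. For that reason, we need to check its return value, just as we do for the TryDequeue method. Keep in mind that in a multi-threaded application another thread may dequeue the item we have just peeked at, so TryPeek followed by TryDequeue is not an atomic operation.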
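A minimal sketch of TryPeek on a non-empty and on an empty queue; the order-N strings are placeholder values:

```csharp
using System;
using System.Collections.Concurrent;

var myQueue = new ConcurrentQueue<string>();
myQueue.Enqueue("order-1");
myQueue.Enqueue("order-2");

// TryPeek returns the front item without removing it.
if (myQueue.TryPeek(out var front))
{
    Console.WriteLine($"Next up: {front}");   // Next up: order-1
}
Console.WriteLine(myQueue.Count);              // 2

// On an empty queue TryPeek returns false instead of throwing.
var emptyQueue = new ConcurrentQueue<string>();
bool peeked = emptyQueue.TryPeek(out _);
Console.WriteLine(peeked);                     // False
```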

Count Property and Clear Method

Lastly, managing the state of a queue often involves checking the number of items it contains and clearing it when necessary.

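The ConcurrentQueue class provides us with two members for these tasks:

```csharp
int count = myQueue.Count;
myQueue.Clear();
```

The Count property tells us how many items are in the queue at the moment we read it. This can be beneficial for monitoring the queue’s workload, but in a multi-threaded application the value may already be outdated by the time we use it. To check whether the queue is empty, the documentation recommends the IsEmpty property over comparing Count with 0.

The Clear method removes all items from the queue, leaving it empty and ready for new data. This can be handy when we need to reset the queue’s state or perform periodic maintenance. Note that Clear is available on .NET Core 2.0 and later, but not on .NET Framework.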
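The following sketch exercises Count, IsEmpty, and Clear on a small queue. It assumes a .NET Core 2.0 or later target, since Clear is not part of .NET Framework:

```csharp
using System;
using System.Collections.Concurrent;

var myQueue = new ConcurrentQueue<int>(new[] { 1, 2, 3 });

// Count is a point-in-time value; other threads may change the queue right after we read it.
int count = myQueue.Count;
Console.WriteLine(count);              // 3

// IsEmpty is the recommended way to test for emptiness.
Console.WriteLine(myQueue.IsEmpty);    // False

// Remove every item at once.
myQueue.Clear();
Console.WriteLine(myQueue.IsEmpty);    // True
```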

Thread Safety and Concurrent Operations

In light of all the practical information we’ve gathered, let’s delve into the crucial aspects of thread safety when using the ConcurrentQueue class and explore how it manages concurrent operations.

Challenges of Multi-Threaded Data Management

Multi-threaded data management introduces several challenges that can lead to data access issues, race conditions, and even data corruption. These challenges arise when multiple threads attempt to read, write, or modify data simultaneously.

Some common issues include:

- Race conditions that occur when two or more threads access shared data concurrently; the final state of the data may depend on the order of execution, leading to unpredictable results

- Data corruption as a result of simultaneous writes to shared data without proper synchronization

- Deadlock scenarios in which threads get stuck waiting for resources held by other threads, causing the application to freeze

Understanding these challenges is crucial for appreciating the value of thread-safe data structures such as ConcurrentQueue, which are designed to mitigate these problems.

Internal Thread Safety Mechanisms

For a better understanding of the ConcurrentQueue class, let’s take a closer look at the internal mechanisms it uses to manage concurrent operations.

One of the primary strategies that the ConcurrentQueue class employs to ensure thread safety is the use of lock-free algorithms.

These algorithms allow multiple threads to perform operations concurrently without requiring explicit locking mechanisms like mutexes or monitors.

The ConcurrentQueue class uses such algorithms for its common enqueue and dequeue paths. As a result, even under high contention, where many threads access the queue simultaneously, threads do not block one another on a lock, which helps the queue maintain throughput and responsiveness. In current .NET versions, a lock is still taken for less frequent operations, such as moving on to a new internal segment when the current one fills up.

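Another crucial mechanism within ConcurrentQueue is the use of CAS (Compare-And-Swap) operations, which .NET exposes through methods such as Interlocked.CompareExchange.

A CAS operation compares the current value of a memory location with an expected value and updates it only if the two match, all as a single atomic step.

This atomicity ensures that concurrent updates do not overwrite each other: if another thread changed the value in the meantime, the CAS fails and the thread can retry with the fresh value.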
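To illustrate the idea, here is a generic CAS retry loop built on Interlocked.CompareExchange. This is not ConcurrentQueue’s internal code, which is considerably more involved; AddWithCas is a hypothetical helper, and for a plain counter Interlocked.Add would do the same job in a single call:

```csharp
using System;
using System.Threading;
using System.Threading.Tasks;

int counter = 0;

// Hypothetical helper: atomically adds 'amount' to 'location' using a CAS retry loop.
static void AddWithCas(ref int location, int amount)
{
    while (true)
    {
        int observed = Volatile.Read(ref location);   // read the current value
        int desired = observed + amount;              // compute the new value
        // Store 'desired' only if 'location' still holds 'observed';
        // CompareExchange returns the value it actually found.
        if (Interlocked.CompareExchange(ref location, desired, observed) == observed)
        {
            return;                                   // our update won
        }
        // Another thread changed the value in between: retry with fresh data.
    }
}

Parallel.For(0, 100_000, _ => AddWithCas(ref counter, 1));
Console.WriteLine(counter);   // 100000
```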

Lastly, memory barriers are synchronization primitives that control the visibility and ordering of memory operations between threads.

The ConcurrentQueue class employs memory barriers strategically so that memory updates made by one thread become visible to other threads in a predictable order. This guarantees that operations on the queue’s internal data structure are coordinated correctly across threads.
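In application code, the Volatile class offers the same kind of ordering guarantees. The sketch below shows the classic publish pattern with release/acquire semantics; it illustrates the concept and is not taken from ConcurrentQueue’s implementation. The payload and ready variables are our own:

```csharp
using System;
using System.Threading;
using System.Threading.Tasks;

int payload = 0;
bool ready = false;

var writer = Task.Run(() =>
{
    payload = 42;                      // 1. write the data
    Volatile.Write(ref ready, true);   // 2. publish: release semantics keep step 1 before this write
});

var reader = Task.Run(() =>
{
    // Acquire semantics: once 'ready' is observed as true, the write to 'payload' is visible too.
    while (!Volatile.Read(ref ready))
    {
        Thread.SpinWait(10);
    }
    return payload;
});

int result = reader.Result;
writer.Wait();
Console.WriteLine(result);   // 42
```

Without Volatile.Write and Volatile.Read, the compiler or CPU would be free to reorder these accesses, so the reader could in principle see ready as true while still reading a stale payload.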

While ensuring thread safety is paramount, ConcurrentQueue also aims to perform well. It balances the two by avoiding locks on its hot paths rather than serializing every operation behind a single lock.

Implementing the Producer-Consumer Pattern

Next, we’ll explore how we can leverage the ConcurrentQueue class to implement the producer-consumer pattern, a common scenario in multi-threaded applications where one or more threads produce data while one or more other threads independently consume and process that data at the same time.

Understanding the Producer-Consumer Pattern

Before diving into the implementation details, let’s flesh out the producer-consumer pattern a bit.

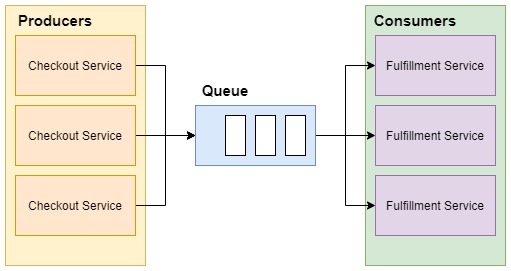

It is a synchronization design pattern where producers generate data, and consumers consume and process that data:

In this diagram, we can see an example implementation of the producer-consumer pattern. In this case, the Checkout service acts as a producer, and the Fulfillment service acts as a consumer. Multiple instances of each of those services are connected by a single message queue which transports requests between them.

This pattern is essential for building parallel and efficient systems, and it often requires robust synchronization mechanisms.

Implementing the Message Bus

Now, what would the implementation of such a pattern look like?

It requires implementing three components: consumer, producer, and message bus.

First, let’s implement a message bus:

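```csharp
using System;
using System.Collections.Concurrent;

public class OrderMessageBus : IMessageBus<Order>
{
    private readonly ConcurrentQueue<Order> _queue = new ConcurrentQueue<Order>();

    public void Add(Order order)
    {
        // Reject null orders before they reach the queue
        if (order == null) throw new ArgumentNullException(nameof(order));

        _queue.Enqueue(order);
    }

    public bool Fetch(out Order order)
    {
        // Returns false (and sets order to null) when the queue is empty
        return _queue.TryDequeue(out order);
    }
}
```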

In our example, the OrderMessageBus class is a simple wrapper over ConcurrentQueue<Order> and via the IMessageBus<Order> interface it exposes Add and Fetch methods.

The Add method delegates to the Enqueue method of our private _queue field. Additionally, it provides us with a guardrail against adding null objects.

In a similar fashion, the Fetch method allows us to use the TryDequeue method of our internal queue.

Implementing Producer

Next, let’s implement a producer that will feed messages to our queue:

```csharp
public class Producer : IProducer
{
    private readonly IMessageBus<Order> _messageBus;

    public Producer(IMessageBus<Order> messageBus)
    {
        _messageBus = messageBus;
    }

    public void SendMessage(Order order)
    {
        _messageBus.Add(order);
    }
}
```

As we can see above, our Producer class accepts an IMessageBus<Order> instance in the constructor and uses it internally to publish new order requests to the message bus.

Implementing Consumer

Lastly, we need to create our consumer class:

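```csharp
using System;
using System.Threading.Tasks;

public class Consumer : IConsumer
{
    private readonly IMessageBus<Order> _messageBus;
    private readonly IFulfillmentService _fulfillmentService;

    public Consumer(IMessageBus<Order> messageBus, IFulfillmentService fulfillmentService)
    {
        _messageBus = messageBus;
        _fulfillmentService = fulfillmentService;
    }

    public async Task Process()
    {
        while (true)
        {
            if (_messageBus.Fetch(out var order))
            {
                await _fulfillmentService.Fulfill(order);
            }
            else
            {
                // The queue is empty: wait briefly instead of spinning the CPU.
                // This also yields control, so callers of Process() are not blocked.
                await Task.Delay(TimeSpan.FromMilliseconds(50));
            }
        }
    }
}
```

The Consumer class is responsible for consuming and processing orders from a message bus asynchronously.

It takes two dependencies during initialization: an IMessageBus<Order> instance that is used to consume messages from the queue and an IFulfillmentService instance used to further process requests.

The Process method runs an endless loop: whenever the message bus returns an order, it awaits _fulfillmentService.Fulfill to process it, and when the queue is empty, it waits briefly (50 ms is an arbitrary choice) before polling again. Without that delay, the loop would spin the CPU on an empty queue and, because it would never reach an await, calling Process() would block the caller indefinitely. Note that Process does not start a new thread by itself, and a single consumer handles orders one at a time; to process orders concurrently, we run several consumers, for example each one via Task.Run. In production code, we would also typically pass a CancellationToken so that the loop can be stopped.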
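Because ConcurrentQueue<T> offers no way to wait until an item arrives, a consumer built directly on top of it, like the one above, has to poll. When blocking a thread is acceptable, .NET’s BlockingCollection<T> wraps an IProducerConsumerCollection<T> (a ConcurrentQueue<T> by default) and lets consumers wait for items. Here is a minimal sketch with two producers and three consumers, using plain integers as stand-ins for Order objects; the counts are arbitrary:

```csharp
using System;
using System.Collections.Concurrent;
using System.Linq;
using System.Threading.Tasks;

// BlockingCollection<T> backed explicitly by a ConcurrentQueue<T> (FIFO).
using var orders = new BlockingCollection<int>(new ConcurrentQueue<int>());
var fulfilled = new ConcurrentBag<int>();

// Two producers, each publishing 50 order IDs.
var producers = Enumerable.Range(0, 2).Select(p => Task.Run(() =>
{
    for (int i = 0; i < 50; i++)
    {
        orders.Add(p * 50 + i);
    }
})).ToArray();

// Three consumers; GetConsumingEnumerable blocks while the queue is empty
// and ends once CompleteAdding has been called and the queue is drained.
var consumers = Enumerable.Range(0, 3).Select(_ => Task.Run(() =>
{
    foreach (var orderId in orders.GetConsumingEnumerable())
    {
        fulfilled.Add(orderId);   // stand-in for real fulfillment work
    }
})).ToArray();

Task.WaitAll(producers);
orders.CompleteAdding();          // signal that no more orders will arrive
Task.WaitAll(consumers);

Console.WriteLine(fulfilled.Count);   // 100
```

Calling CompleteAdding is what lets GetConsumingEnumerable finish; without it, the consumers would keep waiting for new items forever.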

Performance Considerations for ConcurrentQueue

Lastly, when utilizing the ConcurrentQueue class in our multi-threaded applications, it is essential that we consider various performance factors to ensure optimal execution.

Snapshot Operation and Iteration Costs

When we iterate over a ConcurrentQueue instance, we should be mindful of the potential performance impact, especially with large queues. Taking a snapshot, whether through enumeration or methods such as ToArray, has to preserve or copy the queue’s current contents, which can be expensive in terms of both time and memory on large queues.

Memory Usage

The thread safety that the ConcurrentQueue class provides comes with some memory overhead compared to a regular Queue<T>, because it keeps additional bookkeeping for its lock-free operations.

While this may not be a concern in most cases, we should take it into account when memory efficiency is a critical factor in our application.

Imbalanced Workloads

In producer-consumer scenarios, where producers enqueue items and consumers dequeue them, producers that consistently outpace consumers cause the queue to grow without bound, because ConcurrentQueue has no capacity limit. The result is high memory usage.

Therefore, we should ensure that the rates of enqueuing and dequeuing are well-balanced, or cap the number of pending items, to prevent unintended memory growth.
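One way to cap the queue is to wrap it in a BlockingCollection<T> with a boundedCapacity (bounded channels from System.Threading.Channels are another option). When the collection is full, Add blocks the producer until a consumer makes room, while TryAdd fails immediately. A minimal sketch with an arbitrary capacity of two:

```csharp
using System;
using System.Collections.Concurrent;

// A bounded collection over ConcurrentQueue<T> holding at most two items.
using var buffer = new BlockingCollection<string>(new ConcurrentQueue<string>(), boundedCapacity: 2);

bool first = buffer.TryAdd("order-1");
bool second = buffer.TryAdd("order-2");
bool third = buffer.TryAdd("order-3");            // the buffer is full, so this fails at once
Console.WriteLine($"{first} {second} {third}");   // True True False

string taken = buffer.Take();                     // a consumer removes "order-1" (FIFO)
bool fourth = buffer.TryAdd("order-3");           // there is room again
Console.WriteLine($"{taken} {fourth}");           // order-1 True
```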

Latency Considerations

While ConcurrentQueue is optimized for throughput and can handle high levels of concurrent operations efficiently, individual Enqueue and TryDequeue operations may not always provide the lowest latency.

Latency can vary based on system load, the number of threads interacting with the queue, and the size of the items being enqueued or dequeued (for large value types, each operation copies the whole struct).

We should be mindful of latency requirements in our application and conduct performance testing accordingly.

Potential Bottlenecks

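Improper usage of the ConcurrentQueue class can lead to bottlenecks. For instance, when many threads enqueue and dequeue at a very high rate, they all contend for the same internal state at the head and tail of the queue, and the resulting cache-line traffic between CPU cores can degrade performance. We should design our application to minimize such contention, for example by making each queued work item coarse enough that the cost of the queue operation is small in comparison.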

Batch Operations

As we have seen earlier, the ConcurrentQueue class does not provide methods for batch enqueue or dequeue operations.

If our application requires batching, adding custom code to implement these operations might be necessary.

However, it is important to note that poorly designed batch operations can have adverse effects on performance. Therefore, we should carefully consider the trade-offs and benchmark the impact of custom batch handling.
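As a starting point, here is a sketch of two hypothetical helpers, EnqueueRange and TryDequeueBatch, built only on Enqueue and TryDequeue. Each individual call is atomic, but the batch as a whole is not, so other threads can interleave their own operations between iterations:

```csharp
using System;
using System.Collections.Concurrent;
using System.Collections.Generic;

// Hypothetical helper: enqueue a batch one item at a time.
static void EnqueueRange<T>(ConcurrentQueue<T> queue, IEnumerable<T> items)
{
    foreach (var item in items)
    {
        queue.Enqueue(item);
    }
}

// Hypothetical helper: dequeue up to maxCount items, stopping early if the queue runs dry.
// Not atomic as a whole: other threads may enqueue or dequeue between iterations.
static List<T> TryDequeueBatch<T>(ConcurrentQueue<T> queue, int maxCount)
{
    var batch = new List<T>(maxCount);
    while (batch.Count < maxCount && queue.TryDequeue(out var item))
    {
        batch.Add(item);
    }
    return batch;
}

var myQueue = new ConcurrentQueue<int>();
EnqueueRange(myQueue, new[] { 1, 2, 3, 4, 5 });

var firstBatch = TryDequeueBatch(myQueue, 3);
var secondBatch = TryDequeueBatch(myQueue, 3);
Console.WriteLine(string.Join(", ", firstBatch));    // 1, 2, 3
Console.WriteLine(string.Join(", ", secondBatch));   // 4, 5
```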

Conclusion

In this exploration of the ConcurrentQueue class in C#, we’ve unpacked a powerful tool for managing thread-safe collections in the world of concurrent programming. We’ve learned how the ConcurrentQueue class delivers efficient and non-blocking solutions for handling data in multi-threaded environments.

With ConcurrentQueue, we can confidently build robust and high-performance multi-threaded software, harnessing the full potential of modern parallelism while ensuring data integrity.